In our four-part blog series "How to feed your Chatbot", we look at chatbots and voice assistants. We want to show how such assistants work in general terms and, of course, whether (and how) they could be used for both service and user information.

The time has finally come: your company now has a clear vision for its future information concept, and the key use cases for your voice assistant are clearly defined. But now what? Much of the knowledge needed to answer this question can already be found in-house. The software for building voice assistants is available on the market; well-known platforms include Google Assistant, Amazon Alexa, Microsoft Cortana, IBM Watson and Apple's Siri. You can provide your own assistant on all of these platforms, but who should actually set up the voice assistant, feed it with content and maintain it over the coming years? Should these be the tasks of Technical Editors?

If you get into the topic of "How to Build a Voice Assistant", you'll quickly come across new terms such as Training Phrases, Natural Language Understanding (NLU), Intents, Entities and many more. It soon becomes clear that if you want intelligent answers from software, you first have to make it intelligent. Just feeding it text is not enough: the software has to understand what is important in the text and how the pieces of information that users are likely to ask about relate to each other. It may seem as if a specialised IT company is needed to build and maintain the system, but this is not always necessary. Technical Editors often already have some of the necessary skills.
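To make these terms a little more concrete, here is a minimal Python sketch. It is not how any of the platforms named above work internally; it only illustrates the idea. An intent is something the user wants, training phrases are example sentences for that intent, and an entity is a detail picked out of the sentence. The intent names, the phrases and the word-overlap scoring are all assumptions chosen for illustration.

```python
import re

# Hypothetical intents, each with a few training phrases (example sentences).
INTENTS = {
    "error_help": [
        "what do I do in case of error 125",
        "how do I fix error 125",
    ],
    "explain_component": [
        "how does the control unit work",
        "explain the control unit",
    ],
}

# Hypothetical entity: an error number mentioned in the sentence.
ERROR_CODE = re.compile(r"\berror\s+(\d+)\b", re.IGNORECASE)


def tokens(text):
    """Split a sentence into a set of lowercase words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def classify(utterance):
    """Return the intent whose training phrase shares the most words
    with the utterance (Jaccard overlap), or None if nothing overlaps."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, phrases in INTENTS.items():
        for phrase in phrases:
            phrase_words = tokens(phrase)
            score = len(words & phrase_words) / len(words | phrase_words)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent


def extract_error_code(utterance):
    """Return the error number as a string, or None if none is mentioned."""
    match = ERROR_CODE.search(utterance)
    return match.group(1) if match else None


question = "What should I do about error 125?"
print(classify(question), extract_error_code(question))
```

A real NLU service generalises far beyond word overlap, but the editorial work is the same: someone has to decide which intents exist and write good training phrases for them.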

Traditional Editorial Tasks

Even in the first step, Technical Editors can apply their core competencies: analysing the target groups and tailoring information to them. In the design phase, for example, the target groups are made concrete using the Persona method. With the Wizard of Oz method, targeted interviews, or even surveys, the exact requirements and the language of the future users are then researched. After all, the voice assistant should not lose the user right after the first start. So what questions are users likely to ask? Which names will they choose? How "human" should the voice assistant’s answers be? These are important questions that only a well-founded target group analysis can answer.

Ultimately, the necessary content must be researched and created. If the voice assistant is to answer questions directly, rather than simply providing a link to the operating manual, then editorial instincts are required: the answers should be appropriately brief. In addition, texts that are to be spoken are written differently from texts that are to be read.

Last but not least, a didactic concept is required: how must the knowledge be prepared and presented so that it reaches the appropriate target group? In addition to the actual facts, it also matters how human-like the voice assistant behaves, so that users are actually likely to turn to it.

Support for More Complex Processes

The actual implementation of the voice assistant then depends very much on the platform you wish to use. But the topics are similar everywhere: dialogs, including queries and dependencies, have to be recognised and defined, names and synonyms have to be stored, and much more. These are all standard subjects within technical editing, a field that usually doesn’t shy away from more complex software. Depending on the key use case, there is even more: under certain conditions, an export from an existing data source such as a Content Management System is possible. If the information is already available online via appropriate software, the voice assistant can also act as a translator: it translates the user's "natural" question into a request to the data source and returns the desired information to the user.
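The two ideas above, stored synonyms and the assistant as a "translator" between a natural question and a data source, can be sketched in a few lines of Python. Everything here is an assumption for illustration: the synonym table, the placeholder content (standing in for an export from a Content Management System) and the function names are hypothetical, and real platforms offer their own tooling for this.

```python
# Hypothetical synonym table: canonical name -> words users might say.
SYNONYMS = {
    "control unit": ["control unit", "controller", "control panel"],
    "pump": ["pump", "pump unit"],
}

# Hypothetical stand-in for content exported from a CMS.
CONTENT = {
    "control unit": "Placeholder answer text for the control unit.",
    "pump": "Placeholder answer text for the pump.",
}


def resolve_entity(question):
    """Map whatever word the user said to the canonical name, or None."""
    text = question.lower()
    candidates = [
        (synonym, canonical)
        for canonical, synonyms in SYNONYMS.items()
        for synonym in synonyms
    ]
    # Check longer synonyms first so the most specific wording wins.
    for synonym, canonical in sorted(
        candidates, key=lambda pair: len(pair[0]), reverse=True
    ):
        if synonym in text:  # simple substring match, good enough for a sketch
            return canonical
    return None


def answer(question):
    """Translate the question into a lookup and return the stored text."""
    topic = resolve_entity(question)
    if topic is None:
        return "Sorry, I did not understand which component you mean."
    return CONTENT[topic]


print(answer("How does the controller work?"))
```

The synonym table is where the results of the target group analysis end up: it records the names users actually say, not only the names used in the manual.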

In principle, Technical Editors can also create voice assistants. However, as you can see, there are some additional skills that need to be learned beforehand. And depending on the complexity of the interfaces and processes, in-depth IT knowledge may also be required.

Once the content is available, it’s a good idea to check the voice assistant regularly, analyse its use, and adapt the functions accordingly. How this can actually work is described in the fourth (and last) part of our blog series, entitled "How to feed your Chatbot".

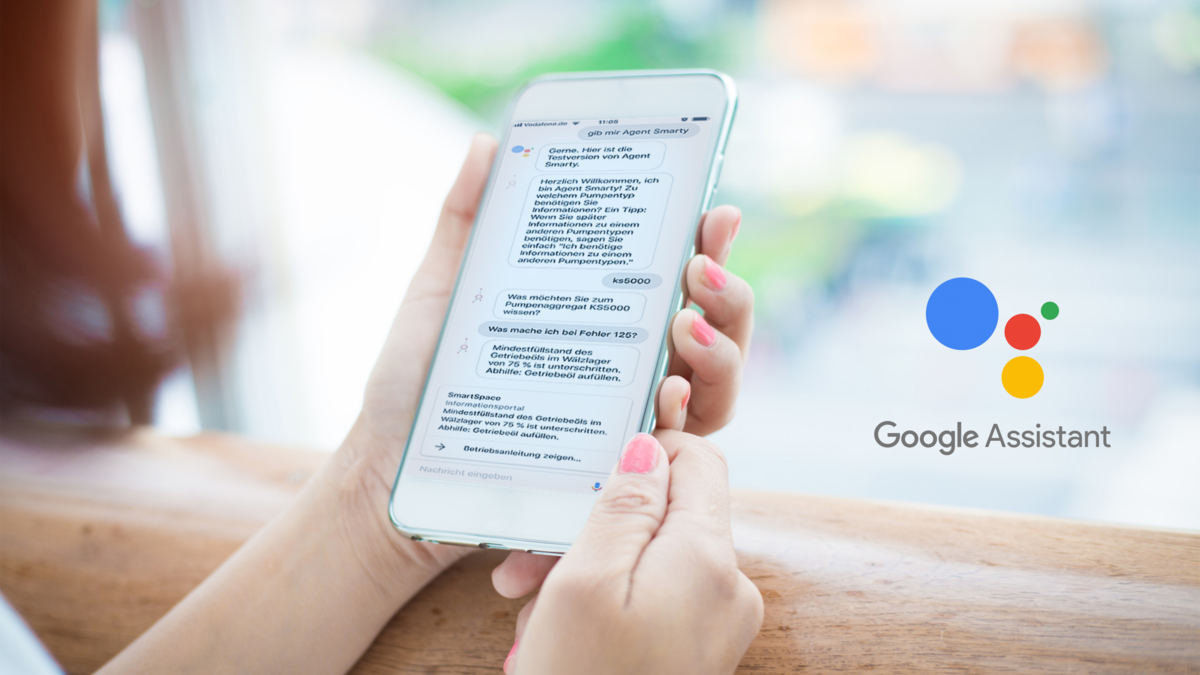

Curious now? Why not try out what such a voice assistant for user information might feel like? You'll need the Google Assistant app and a Google Account. You can use them to test our Agent Smarty voice assistant. He answers questions whose answers would otherwise be found in the operating instructions. You can view the operating instructions (after registering) on our Smart Space.

Start the app and say, "Okay Google, give me Agent Smarty!"

When asked for the pump type, answer "KS5000".

Now you can ask the voice assistant various questions about the Smarty KS5000 pump unit. For example: "What do I do in case of error 125?" or "How does the Control unit work?"

Have fun trying it out.